Big Question

What is Artificial Intelligence Getting Wrong?

Trevor Paglen analyzes how your selfies fuel artificial intelligence

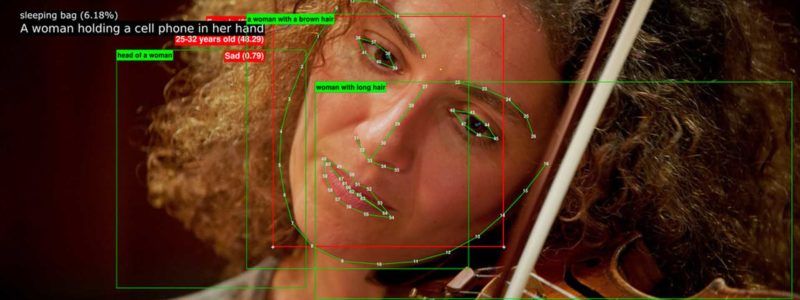

I’m asking: what is an image? What are the consequences of using a thousand pictures of an apple to, in fact, define apples for an artificial intelligence database? This leads to social and political consequences: what is an image of a woman, what is an image of a man? These are highly political questions.

Magritte challenged the relationship between image and label, and this bears a different weight in 2019 than it did in the 1920s. At AI Now, I take an archeological approach to visual training sets (large archives of vernacular photography) that define human emotions, gestures, and different kinds of people. I’m looking at how this imagery is categorized, and at the politics nested within these taxonomies. For example, there is data that categorizes the human face by gender and by race. The categorization for gender was either zero (man) or one (woman), which feels like a kind of erasure of queer and trans communities.

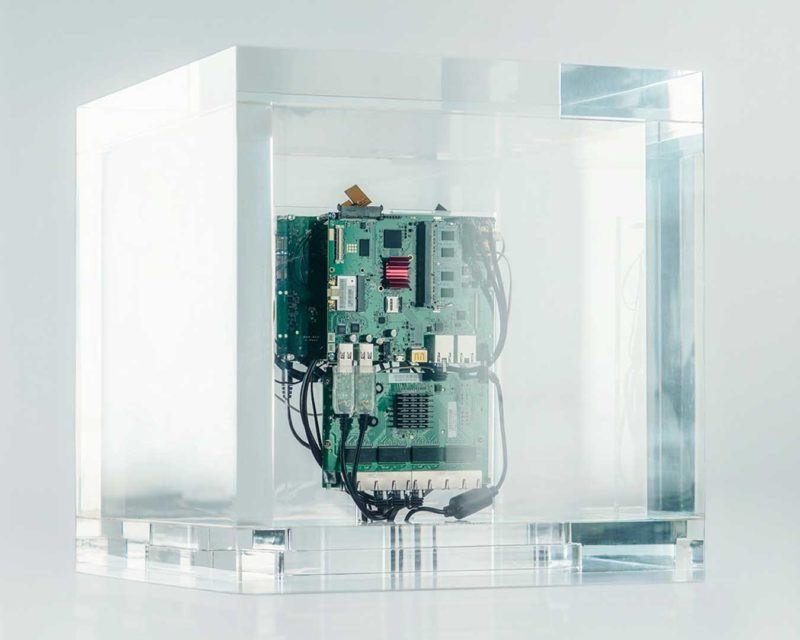

Digital platforms like Flickr, Facebook, and Instagram create an architecture for sharing photos that respond to the moment, but behind the scenes those images are being used to fuel powerful AI systems. That raises the question: what kind of spaces do we want to create?

Trevor Paglen